Below are Joshua’s notes for folks using his new SharePoint mechanism, which implements a workflow for our Dec 18 review process.

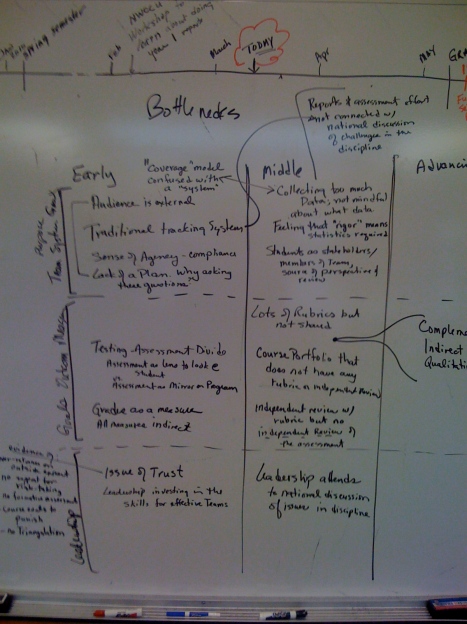

The attached image is from Thurs 14 (last week), when we diagrammed the steps. Joshua implemented a trial mechanism to capture the remaining Dec 18 work as a test run for the May 17 work we are anticipating. Ashley has started populating that structure in Assessment.wsu.edu.

The figure shows the agenda for the meeting and, to its right, in Nils’s hand, a set of steps in blue and red flowing down and to the right, with an associated numbered list of steps in red.

—— Forwarded Message

From: Joshua Yeidel

Date: Tue, 19 Jan 2010 14:17:04 -0800

—————–DRAFT—————————–

Here are some tips for making successful use of the internal workspace for the 2009 December Planning document reviews:

There are two components: a “Process Documents” library for non-public documents related to program reviews, and a “Process Actions” list which identifies what the next action is for each program’s review, and to whom the action is assigned. Both components can be accessed via links in the left-hand navigation bar.

When a new review comes in, create a “New Folder” with the program’s name in the “Process Documents” library if one does not already exist. This folder will be used for any internal (non-public) documents related to the review (e.g., draft responses). Public documents (such as the original submission) go in the “2009-2010” site on UniversityPortfolio.

ALSO create a new tracking item with the program name in the “Process Actions” list. Set the “Next Action” and “Assigned To” fields appropriately. This will cause an email to be sent to the new assignee. To identify the “Assigned To” person, enter the person’s WSU network ID or “lastname,firstname”, then click the checkmark icon on the right end of the text box to “Check Names”. The server will replace the ID or name with an underlined name, meaning that a match for that entry has been found. If no match is found (usually due to a typo) and you are using Internet Explorer, you can right-click on the unmatched entry for further search options.

Each time an action is completed, update the Process Actions item via the “Edit Item” button or drop-down menu item. Change the “Next Action”, “Assigned To” and “Instructions” fields as needed. This will send an email to the assignee listing those fields.

If you don’t know what the next action for a review should be, you can select “Figure out next action” at the bottom of the “Next Action” pick-list, and assign it to a director.

You may assign a review to more than one person (for example, in cases where raters need to reconcile divergent ratings), but “Next Action” can have only one value at a time.

Each “Process Actions” item includes a “Log Notes” field which is cumulative. When you make an entry, it is displayed at the top of a list of previous “Log Notes” entries for that item. Use this for any information that is important to track (e.g., “rating cannot be completed until Joshua returns on 1/25/2010”). There is no need to log routine information.

Please let me know if you have successes or if you experience any difficulty. This is a pilot of a tracking method we may use for the May cycle, so please surface issues so they can (hopefully) be addressed.

— Joshua

—— End of Forwarded Message
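
For anyone who finds it easier to think of the tracking rules as a data model, here is a minimal Python sketch of one “Process Actions” item as Joshua’s notes describe it: a single “Next Action” value, one or more assignees, and cumulative “Log Notes” shown newest first. This is only an illustration, not how SharePoint stores or emails anything. The class name `ProcessAction`, its methods, the network IDs, and every action name other than “Figure out next action” are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProcessAction:
    """Hypothetical stand-in for one item in the "Process Actions" list."""

    program: str
    next_action: str          # only one value at a time
    assigned_to: List[str]    # one or more assignees
    instructions: str = ""
    log_notes: List[str] = field(default_factory=list)  # newest entry first

    def update(
        self,
        next_action: str,
        assigned_to: List[str],
        instructions: str = "",
        log_note: Optional[str] = None,
    ) -> str:
        """Record a completed step and return the text of the notification."""
        if not assigned_to:
            raise ValueError("at least one assignee is required")
        self.next_action = next_action
        self.assigned_to = list(assigned_to)
        self.instructions = instructions
        if log_note:
            # Cumulative log: the new entry goes on top of the earlier ones.
            self.log_notes.insert(0, log_note)
        return self.notification()

    def notification(self) -> str:
        """Assumed layout of the email; the real message format may differ."""
        return (
            f"To: {', '.join(self.assigned_to)}\n"
            f"Program: {self.program}\n"
            f"Next Action: {self.next_action}\n"
            f"Instructions: {self.instructions}"
        )


# Hypothetical walk-through of one review.
item = ProcessAction("Example Program", "Rate submission", ["rater1"])
item.update(
    "Reconcile ratings",
    ["rater1", "rater2"],
    instructions="Ratings diverge; please meet and agree.",
    log_note="rating cannot be completed until Joshua returns on 1/25/2010",
)
print(item.update("Figure out next action", ["director1"]))
```

The point of the sketch is the shape of the record: every update replaces the “Next Action”, “Assigned To” and “Instructions” values, while log notes only accumulate.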
