This post demonstrates how to harvest rubric-based feedback in a course, and how that feedback can be used by instructors and programs as well as by students. It was prepared for a webinar hosted by the TLT Group. (Update 7/28: Webinar archive here. Minutes 16-36 are our portion; minutes 24-31 are music while participants work on the online task. Our portion is followed by Terry Rhodes of AAC&U, with some kind comments about how the WSU work illustrates ideas in the AAC&U VALUE initiative. Minutes 52-54 of the session are Rhodes' summary of VALUE and its goal of rolling up assessment from the course level to the program level. This process demonstrates that capability.)

Webinar Activity (for the July 28 session; it should also work before and after the session, see below)

- Visit this page (opens in a new window)

- On the new page, complete a rubric rating of either the student work or the assignment that prompted the work.

Pre/Post Webinar

If you found this page but are not attending the webinar, you can still participate.

- Visit the page above and rate either the student work or the assignment using the rubric. Your ratings will be captured, but the reports will not update for you in real time.

- Explore the three tabs across the top of the page to see the data reported from previous raters.

- Links to review:

Discussion of the activity

The online session is short on time, so we invite you to discuss these ideas in the comment section below. A TLT Group “Friday Live” session is also being planned for Friday, Sept. 25, 2009, where you can join a discussion of these ideas.

In the webinar activity above, we demonstrated using an online rubric-based survey to assess an assignment and to assess the student work created in response to that assignment. The student work, the assignment, and the rubric were all used together in a course at WSU. Other courses we have worked with have longer and richer assignments and student products; we chose these abbreviated pieces for pragmatic reasons, to allow rapid scoring and reporting of data during a short webinar.

The process we are exploring allows feedback to be gathered on work in situ on the Internet (e.g., in a learner’s ePortfolio), without requiring that the work first be collected into an institutional repository. Gary Brown coined the term “Harvesting Gradebook” to describe the concept, but we have come to understand that the technique can “harvest” more than grades, so a better term might be “harvesting feedback.”

Harvesting provides a mechanism to support community-based learning (see Institutional-Community Learning Spectrum). As we have piloted community-based learning activities within a university context, we have come to understand that it is important to assess not only student work but also the assignments and the assessment instruments.
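To make the harvesting idea a little more concrete, here is a minimal Python sketch of what a harvested rating record might look like. It assumes, purely for illustration, that each rating points at the work by its URL (where it already lives, such as an ePortfolio page), records whether the rater assessed the student work, the assignment, or the instrument, and stores a score per rubric dimension. The class name, field names, and target labels are hypothetical, not the schema of the WSU tool.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical labels for the three things being assessed.
TARGETS = {"student_work", "assignment", "instrument"}


@dataclass
class HarvestedRating:
    """One rater's rubric scores for one piece of work that stays in place on the web."""
    work_url: str     # where the work lives (e.g., an ePortfolio page); nothing is copied
    target: str       # one of TARGETS
    rater_role: str   # e.g., "faculty", "student", "professional"
    scores: dict = field(default_factory=dict)  # rubric dimension -> numeric score

    def __post_init__(self):
        if self.target not in TARGETS:
            raise ValueError(f"unknown target: {self.target!r}")


def summarize(ratings):
    """Mean score per (work_url, target, dimension) across all raters."""
    buckets = defaultdict(list)
    for r in ratings:
        for dimension, score in r.scores.items():
            buckets[(r.work_url, r.target, dimension)].append(score)
    return {key: mean(values) for key, values in buckets.items()}
```

Keeping a URL rather than a copy of the work is what lets feedback be gathered in situ, and the `target` field lets the same records cover assignments and instruments as well as student work.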

Importance of focusing assessments on Student Work

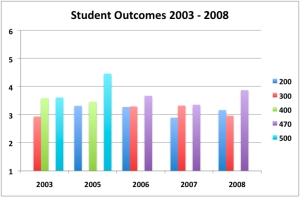

Gathering input on student projects provides students with authentic experiences, maintains ways to engage students in authentic communities, helps the community consider new hires, and gives employers the kind of interaction with students that the university can capitalize on when asking for money. But we have also come to understand that assessing student learning often yields little change in course design or learning outcomes (Figure 1). (See also http://chronicle.com/news/article/6791/many-colleges-assess-learning-but-may-not-use-data-to-improve-survey-finds?utm_source=at&utm_medium=en )

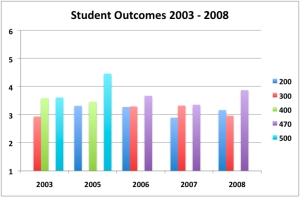

Figure 1. In the period 2003-2008, the program assessed student papers using the rubric above. Scores for the rubric dimensions are averaged in this graph. The work represented in this figure is different from the work being scored in the activity above. The program set the “4” level on the rubric as the competency level for a student graduating from the program.

The data in Figure 1 come from the efforts of a program that has been collaborating with CTLT for five years. The project has been assessing student papers using a version of the Critical Thinking Rubric tailored for the program’s needs.

Those efforts, measuring student work alone, did not produce any demonstrable change in the quality of the student work (Figure 1). In the figure, note that:

- Student performance does not improve with increasing course level (e.g., 200-, 300-, and 400-level courses) within a given year

- Only once were students judged to meet the competency level set by the program itself (500-level, 2005)

- Across the years studied, student performance within a course level did not improve, e.g., examine the 300-level course in 2003, 2006, 2007, 2008
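The kind of summary plotted in Figure 1 can be sketched in a few lines of Python. This is one plausible reading of "scores for the rubric dimensions are averaged," not the program's actual procedure: it averages each paper's dimension scores, averages those per (year, course level), and lists the groups that reach the program's competency level of 4. The record keys and dimension names are hypothetical.

```python
from statistics import mean

# The program treated the "4" level on its rubric as graduation competency.
COMPETENCY_LEVEL = 4


def average_by_year_and_level(papers):
    """Average rubric scores for each (year, course level) group.

    Each paper is a dict with hypothetical keys "year", "level", and
    "scores" (rubric dimension -> numeric score). Each paper's dimension
    scores are averaged first; those per-paper averages are then averaged
    within the group.
    """
    groups = {}
    for paper in papers:
        key = (paper["year"], paper["level"])
        groups.setdefault(key, []).append(mean(list(paper["scores"].values())))
    return {key: mean(values) for key, values in groups.items()}


def groups_at_competency(averages, threshold=COMPETENCY_LEVEL):
    """Return the (year, level) groups whose average meets the threshold, sorted."""
    return sorted(key for key, avg in averages.items() if avg >= threshold)
```

Reading the resulting averages across levels within one year, and across years within one level, gives the two comparisons in the list above.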

Importance of focusing assessments on Assignments

Assignments are important places for the wider community to give input, because the effort the community spends assessing assignments can be leveraged across a large group of students. Additionally, if faculty lack mental models of alternative pedagogies, assignment assessment helps focus faculty attention on very concrete strategies they can actually use to help students improve.

The importance of assessing more than just student work can be seen in Figure 1. As these results unfolded, we suggested that the program focus attention on assignment design. However, the program did not follow through in reflecting on and revising its assignments, nor did it follow through on our suggestions to improve how the rubric criteria were communicated to students.

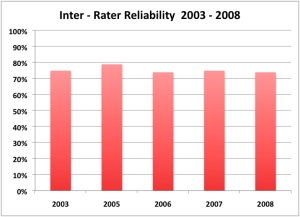

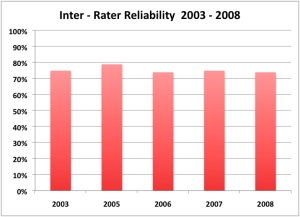

Figure 2 shows the inter-rater reliability for the same program. Note that inter-rater reliability is 70% or higher and is consistent from year to year.

Graph of inter-rater reliability data

This level of agreement is borderline and problematic: extrapolated to high-stakes testing, or even to grades, such marginal agreement speaks disconcertingly to the coherence (or lack thereof) of the program.
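The post does not say how the program computed its agreement figure. A common, simple option is percent agreement: for each paper, compare every pair of raters and count the pair as agreeing if their scores match exactly (or, with a looser tolerance, fall within one rubric point). The Python sketch below assumes that definition; the function name and the `tolerance` parameter are illustrative. Chance-corrected statistics such as Cohen's kappa are a stricter alternative.

```python
from itertools import combinations


def percent_agreement(scores_by_rater, tolerance=0):
    """Percent of rater pairs, across all papers, whose scores agree.

    scores_by_rater maps a rater name to a list of scores; position i in
    every list must refer to the same paper. tolerance=0 counts exact
    matches only; tolerance=1 also counts scores one rubric point apart.
    """
    raters = list(scores_by_rater)
    if len(raters) < 2:
        raise ValueError("need at least two raters")
    n_papers = len(scores_by_rater[raters[0]])
    if n_papers == 0:
        raise ValueError("no papers to compare")
    if any(len(scores) != n_papers for scores in scores_by_rater.values()):
        raise ValueError("every rater must score the same papers")

    agree = total = 0
    for i in range(n_papers):
        # Compare every pair of raters on paper i.
        for a, b in combinations(raters, 2):
            total += 1
            if abs(scores_by_rater[a][i] - scores_by_rater[b][i]) <= tolerance:
                agree += 1
    return 100.0 * agree / total
```

Whether "agreement" means exact matches or adjacent scores changes the number considerably, so it is worth stating which definition is behind any reported percentage.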

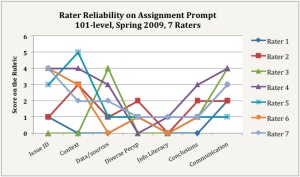

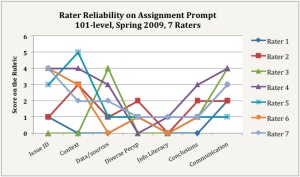

Figure 3 comes from a different program. It shows faculty ratings (inter-rater reliability) on a 101-level assignment and provides a picture of the maze, or obstacle course, of faculty expectations that students must navigate. Higher inter-rater reliability would be indicative of greater program coherence and should lead to higher student success.

Importance of focusing assessments on Assessment Instruments

Both our own work and Allen and Knight (Table 4) have found that faculty and professionals place different emphasis on the criteria used to assess student work. Assessing the instrument in a variety of communities offers the chance to have conversations about the criteria and to address questions about the program's relevance to the community.
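When the same instrument is circulated to faculty and to professionals, the comparison described above can be tabulated simply. The Python sketch below assumes each respondent rates how important each criterion is on a numeric scale; the group labels "faculty" and "professional", the criterion names, and the scale are hypothetical. It reports each group's mean and the gap between them, largest gap first, as one way to pick which criteria to raise in a conversation with the community.

```python
from collections import defaultdict
from statistics import mean


def criterion_gaps(responses):
    """Mean importance per criterion for each group, and the gap between groups.

    responses: iterable of (group, criterion, importance) tuples, e.g.
    ("faculty", "uses evidence", 4). Only "faculty" and "professional"
    groups are compared. Returns (criterion, faculty_mean,
    professional_mean, gap) tuples, largest absolute gap first.
    """
    responses = list(responses)
    by_key = defaultdict(list)
    for group, criterion, importance in responses:
        by_key[(group, criterion)].append(importance)

    rows = []
    for criterion in sorted({c for _, c, _ in responses}):
        fac = by_key.get(("faculty", criterion))
        pro = by_key.get(("professional", criterion))
        if not fac or not pro:
            continue  # a criterion needs ratings from both groups to compare
        f, p = mean(fac), mean(pro)
        rows.append((criterion, f, p, p - f))
    rows.sort(key=lambda row: abs(row[3]), reverse=True)
    return rows
```

A positive gap means professionals rated the criterion as more important than faculty did.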

Summary

The intention of the triangulated assessment demonstrated above (assignment, student work, and assessment instrument) is to keep the conversation about all parts of the process open, so that we can develop and test action plans that have the potential to enhance learning outcomes. We are moving from pilot experiments with this idea to strategies for using the information to inform program-wide learning outcomes and for feeding that data into ongoing accreditation work.
